About us

The AIGVE website hosts the documentation, tutorials, examples, and latest updates for the AIGVE library.

🚀 What is AIGVE?

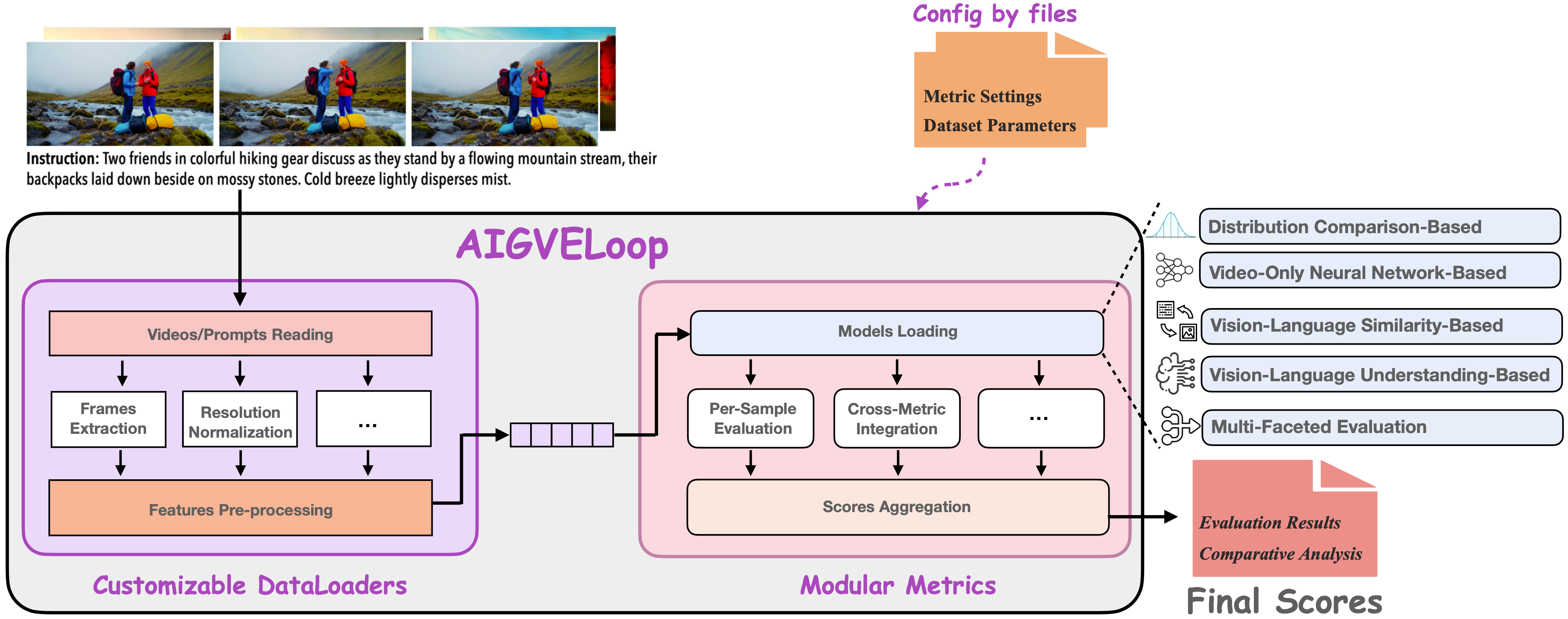

AIGVE (AI Generated Video Evaluation Toolkit), developed by the IFM Lab, provides a comprehensive and structured framework for evaluating the quality of AI-generated videos. It integrates multiple evaluation metrics covering diverse aspects of video evaluation, including neural-network-based assessment, distribution comparison, vision-language alignment, and multi-faceted analysis.

- Official Website: https://www.aigve.org/

- GitHub Repository: https://github.com/ShaneXiangH/AIGVE_Tool

- PyPI Package: https://pypi.org/project/aigve/

- AIGVE-Bench Full Dataset: https://huggingface.co/datasets/xiaoliux/AIGVE-Bench

- IFM Lab: https://www.ifmlab.org/

Citing Us

The aigve library is developed based on the AIGVE-Tool paper from the IFM Lab, which can be downloaded via the following link:

- AIGVE-Tool Paper (2025): https://arxiv.org/abs/2503.14064

If you find the AIGVE library and the AIGVE-Tool paper useful in your work, please cite the paper as follows:

@article{xiang2025aigvetoolaigeneratedvideoevaluation,
  title={AIGVE-Tool: AI-Generated Video Evaluation Toolkit with Multifaceted Benchmark},
  author={Xinhao Xiang and Xiao Liu and Zizhong Li and Zhuosheng Liu and Jiawei Zhang},
  year={2025},
  journal={arXiv preprint arXiv:2503.14064},
  eprint={2503.14064},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2503.14064},
}

Library Organization

| Component | Description |
|---|---|
| aigve | The library for assessing AI-generated video quality |
| aigve.configs | A library for parameter configuration and management |
| aigve.core | A library for video evaluation process design |
| aigve.datasets | A library for dataset loading design |
| aigve.metrics | A library for video evaluation metrics design and building |
| aigve.utils | A library for utility function definition |

Evaluation Metrics Zoo

Distribution Comparison-Based Evaluation Metrics

These metrics assess the quality of generated videos by comparing the distribution of real and generated samples.

- ✅ FID: Fréchet Inception Distance (FID) quantifies the similarity between the feature distributions of real and generated videos by measuring the Wasserstein-2 (Fréchet) distance between Gaussians fitted to Inception-network features.

- ✅ FVD: Fréchet Video Distance (FVD) extends the FID approach to the video domain by leveraging spatio-temporal features extracted from action recognition networks.

- ✅ IS: Inception Score (IS) evaluates both the quality and diversity of generated content by comparing each sample's conditional label distribution with the marginal label distribution over all samples.
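Once features or class probabilities have been extracted, the distribution-based metrics above reduce to short formulas. The sketch below assumes you already have per-sample feature vectors (for FID, e.g. Inception activations of video frames; for FVD, clip-level features from an action-recognition network) and per-sample class probabilities for IS. The function names `frechet_distance` and `inception_score` are hypothetical illustrations, not part of the aigve API, and the random arrays in the usage section only exercise the math.

```python
import numpy as np


def frechet_distance(feats_real: np.ndarray, feats_gen: np.ndarray) -> float:
    """Fréchet (Wasserstein-2) distance between Gaussians fitted to two feature sets.

    Computes ||mu_r - mu_g||^2 + Tr(S_r + S_g - 2 (S_r S_g)^(1/2)).
    Inputs have shape (num_samples, feature_dim).
    """
    mu_r, mu_g = feats_real.mean(axis=0), feats_gen.mean(axis=0)
    s_r = np.atleast_2d(np.cov(feats_real, rowvar=False))
    s_g = np.atleast_2d(np.cov(feats_gen, rowvar=False))

    # Symmetric square root of S_r via its eigendecomposition.
    w, v = np.linalg.eigh(s_r)
    s_r_sqrt = (v * np.sqrt(np.clip(w, 0.0, None))) @ v.T

    # Tr((S_r S_g)^(1/2)) equals the sum of square roots of the eigenvalues of the
    # symmetric PSD matrix S_r^(1/2) S_g S_r^(1/2), which avoids a general sqrtm.
    m = s_r_sqrt @ s_g @ s_r_sqrt
    eig = np.linalg.eigvalsh((m + m.T) / 2.0)
    tr_covmean = np.sqrt(np.clip(eig, 0.0, None)).sum()

    diff = mu_r - mu_g
    return float(diff @ diff + np.trace(s_r) + np.trace(s_g) - 2.0 * tr_covmean)


def inception_score(probs: np.ndarray, eps: float = 1e-12) -> float:
    """IS = exp(E_x[KL(p(y|x) || p(y))]) for probabilities of shape (num_samples, num_classes)."""
    p_y = probs.mean(axis=0, keepdims=True)  # marginal label distribution p(y)
    kl = (probs * (np.log(probs + eps) - np.log(p_y + eps))).sum(axis=1)
    return float(np.exp(kl.mean()))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    real = rng.normal(size=(500, 8))
    gen = rng.normal(loc=0.5, size=(500, 8))
    print(frechet_distance(real, gen))  # grows as the two distributions drift apart
    print(inception_score(np.eye(10)))  # confident, evenly spread predictions: close to 10
```

Computing the trace term through a symmetric eigendecomposition keeps the sketch dependent on NumPy alone; production implementations often call a general matrix square root instead.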

Video-only Neural Network-Based Evaluation Metrics

These metrics leverage deep learning models to assess AI-generated video quality based on learned representations.

- ✅ GSTVQA: Generalized Spatio-Temporal VQA (GSTVQA) employs graph-based spatio-temporal analysis to assess video quality.

- ✅ SimpleVQA: Simple Video Quality Assessment (Simple-VQA) utilizes deep learning features for no-reference video quality assessment.

- ✅ LightVQA+: Light Video Quality Assessment Plus (Light-VQA+) incorporates exposure quality guidance to evaluate video quality.

Vision-Language Similarity-Based Evaluation Metrics

These metrics evaluate alignment, similarity, and coherence between visual and textual representations, often using embeddings from models like CLIP and BLIP.

- ✅ CLIPSim: CLIP Similarity (CLIPSim) leverages CLIP embeddings to measure semantic similarity between videos and text.

- ✅ CLIPTemp: CLIP Temporal (CLIPTemp) extends CLIPSim by incorporating temporal consistency assessment.

- ✅ BLIPSim: Bootstrapping Language-Image Pre-training Similarity (BLIPSim) uses the BLIP vision-language model to evaluate video-text alignment.

- ✅ PickScore: PickScore incorporates human preference data to provide a more perceptually aligned measurement of video-text matching.
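CLIPSim and CLIPTemp can be illustrated with precomputed embeddings. The sketch below assumes per-frame image embeddings and a prompt embedding have already been produced by a CLIP encoder. It averages frame-to-text cosine similarity for a CLIPSim-style score and averages the cosine similarity of consecutive frames for a CLIPTemp-style score. These are common formulations, not necessarily aigve's exact implementation (which may, for example, rescale scores), and the function names are hypothetical.

```python
import numpy as np


def _l2_normalize(x: np.ndarray) -> np.ndarray:
    # Normalize along the last axis so that dot products equal cosine similarities.
    return x / np.linalg.norm(x, axis=-1, keepdims=True)


def clip_sim(frame_emb: np.ndarray, text_emb: np.ndarray) -> float:
    """Mean cosine similarity between each frame embedding (T, D) and the text embedding (D,)."""
    return float((_l2_normalize(frame_emb) @ _l2_normalize(text_emb)).mean())


def clip_temp(frame_emb: np.ndarray) -> float:
    """Mean cosine similarity between consecutive frame embeddings (T, D); requires T >= 2."""
    f = _l2_normalize(frame_emb)
    return float((f[:-1] * f[1:]).sum(axis=1).mean())


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    frames = rng.normal(size=(16, 512))  # stand-in for CLIP image embeddings of 16 frames
    prompt = rng.normal(size=512)        # stand-in for the CLIP text embedding of the prompt
    print(clip_sim(frames, prompt), clip_temp(frames))
```

Averaging over frames treats every frame equally; a temporally smooth video yields a CLIPTemp-style score near 1, while abrupt content changes between frames pull it down.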

Vision-Language Understanding-Based Evaluation Metrics

These metrics assess higher-level understanding, reasoning, and factual consistency in vision-language models.

- ✅ VIEScore: Visual Instruction-guided Explainable Score (VIEScore) provides explainable assessments of conditional image synthesis.

- ✅ TIFA: Text-Image Faithfulness Assessment (TIFA) employs question-answering techniques to evaluate text-to-image alignment.

- ✅ DSG: Davidsonian Scene Graph (DSG) improves fine-grained evaluation reliability through advanced scene graph representations.
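TIFA's question-answering approach ends in a simple accuracy computation: questions with expected answers are derived from the prompt, a visual question answering (VQA) model answers them from the generated content, and the score is the fraction answered correctly. The sketch below shows only that final scoring step. The `answer_fn` callable is a hypothetical stand-in for a VQA model (for video, it might query sampled frames), the case-insensitive exact-match comparison is a simplifying assumption, and DSG's dependency structure between questions is not modeled.

```python
from typing import Callable, Sequence, Tuple


def qa_faithfulness(
    qa_pairs: Sequence[Tuple[str, str]],
    answer_fn: Callable[[str], str],
) -> float:
    """Fraction of (question, expected_answer) pairs that answer_fn answers correctly."""
    if not qa_pairs:
        raise ValueError("qa_pairs must contain at least one question")
    correct = sum(
        # Case-insensitive exact match: a simplification of real answer matching.
        answer_fn(question).strip().lower() == expected.strip().lower()
        for question, expected in qa_pairs
    )
    return correct / len(qa_pairs)


if __name__ == "__main__":
    # Hypothetical questions derived from the prompt "two people walk a dog past a red car".
    qa_pairs = [
        ("Is there a dog?", "yes"),
        ("What color is the car?", "red"),
        ("How many people are there?", "two"),
    ]
    # Stub VQA model: a fixed lookup table standing in for real model answers.
    stub_answers = {
        "Is there a dog?": "Yes",
        "What color is the car?": "blue",
        "How many people are there?": "two",
    }
    print(round(qa_faithfulness(qa_pairs, stub_answers.__getitem__), 3))  # 0.667
```

Because each question targets one element of the prompt, a per-question breakdown also shows which parts of the prompt the generated content failed to depict.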

Multi-Faceted Evaluation Metrics

These metrics integrate structured, multi-dimensional assessments to provide a holistic benchmarking framework for AI-generated videos.

- ✅ VideoPhy: Video Physics Evaluation (VideoPhy) specifically assesses the physical plausibility of generated videos.

- ✅ VideoScore: Video Score (VideoScore) simulates fine-grained human feedback across multiple evaluation dimensions.

- ✅ VBench: VBench provides a comprehensive benchmark by combining multiple aspects such as consistency, realism, and alignment into a unified scoring system.

Key Features

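- Multi-Dimensional Evaluation: Covers video quality and coherence, video-text alignment, physical plausibility, and holistic benchmarking.

- Open-Source & Customizable: Released under the MIT License and designed for easy integration and extension.

- Cutting-Edge AI Assessment: Supports evaluating videos produced by a variety of AI video generation tasks.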

License & Copyright

Copyright © 2025 IFM Lab. All rights reserved.

AIGVE source code is published under the terms of the MIT License. AIGVE documentation and the AIGVE-Tool paper are licensed under a Creative Commons Attribution-ShareAlike 4.0 International License (CC BY-SA 4.0).